Stories have long had the potential to transcend time, place, and feeling. The challenge has always come in translation; an emotional moment in one language loses its potency when poorly dubbed or literally translated. The rhythm is off, mouths don’t align, and the moment no longer feels spontaneous. What if we were able to bypass that barrier altogether with technology?

Thanks to lip sync AI, that possibility has become a reality. Companies such as Pippit are driving this transformation in how stories are told and consumed in and between languages. With closely synced mouth movements, natural-sounding voiceovers, and believable emotion, AI now handles much of the work of translation. What once took studio time with actors and expensive re-shoots can now be delivered in a matter of minutes, with accuracy and heart. The narrative landscape has shifted from traditional dubbing to a fully immersive multilingual experience.

Subtitles are fading out

Subtitles used to connect cultures, but they also hijacked viewers’ attention. Viewers had to choose between reading and feeling. Now they don’t have to. Lip-synced videos make text overlays redundant by unobtrusively matching voice and expression.

With this transition, immersion is total. A viewer watching a Spanish drama dubbed into English no longer notices the translation; they simply experience the story. The characters move and perform as if they had always been meant to speak that language. It’s global storytelling without compromise.

Creators driving the lip sync revolution

Filmmakers, creators, and educators alike are embracing this change. With Pippit, they can convert any clip into a multilingual one. Rather than re-recording, they upload their video, enter translated text or audio, and let the AI handle the syncing.

Influencers use it to reach audiences across continents. Teachers use it to teach in different languages without re-shooting lessons. Even small content creators, previously limited by production budgets, can now make content that resonates globally.

Where emotion meets engineering

Lip sync AI is about more than technical accuracy; it’s digital empathy. Every tiny movement, every breath, every expression is replicated to mirror human emotion. The AI learns how the face moves when expressing anger, joy, or sadness and reproduces it authentically.

The technology bridges not only languages but emotions. It ensures that an emotional moment in Japanese lands just as strongly in Urdu or French. It’s emotion expressed in pixels, yet it reads as remarkably human. Combined with Pippit, the workflow is seamless. Creators don’t just translate their work; they humanize it, bringing each scene to life in multiple voices, cultures, and tones.

Bridging cultures with synchronized storytelling

Each language has its own rhythm, tone, and feeling. Conventional dubbing often loses that rhythm, but AI preserves it. By adjusting not only the lips but also facial micro-expressions, it gives each translated segment a natural rhythm. This means global brands can communicate with empathy and accuracy, educational institutions can make lessons accessible to non-native speakers, and filmmakers can tell one story in multiple voices without losing a single ounce of meaning.

How Pippit makes storytelling universal

Pippit turns complex multilingual production into a simple creative process. With its photo-to-video AI, users can convert still images or recorded footage into expressive performances in any language. Users don’t need editing skills or translation tools. All they need is imagination, and Pippit takes care of the rest.

Mastering multilingual magic: 3 easy steps to lip sync AI videos with Pippit

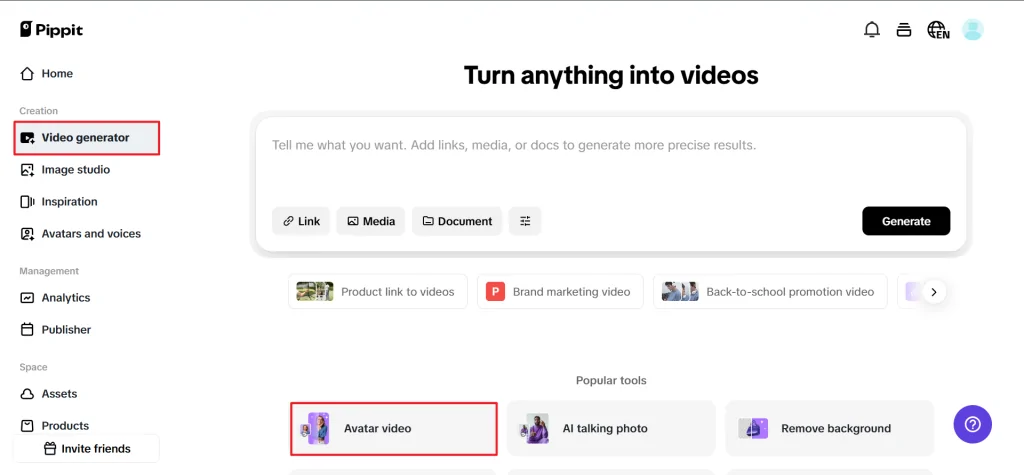

Step 1: access the video generator and avatars

Log in to Pippit and open the Video Generator from the left-hand menu. Under Popular Tools, select Avatars, then pick or create an AI avatar for your video. This feature lets you sync voiceovers and animate avatars to create fun, engaging content.

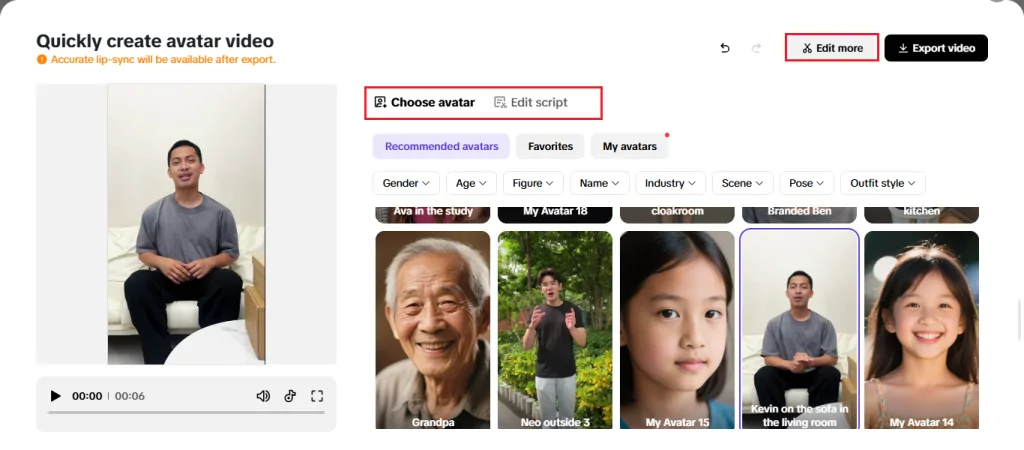

Step 2: select an avatar and edit the script

Once you’re in the avatar tools, open the Recommended Avatars section and select the avatar you want. You can filter avatars by gender, age, industry, and other attributes to find the best match for your video. After you pick an avatar, click Edit Script to modify the dialogue. You can enter text in multiple languages, and the avatar will lip sync it accurately. To enhance your video further, scroll down to Change Caption Style and choose captions that suit your video’s theme and topic to keep your audience engaged.

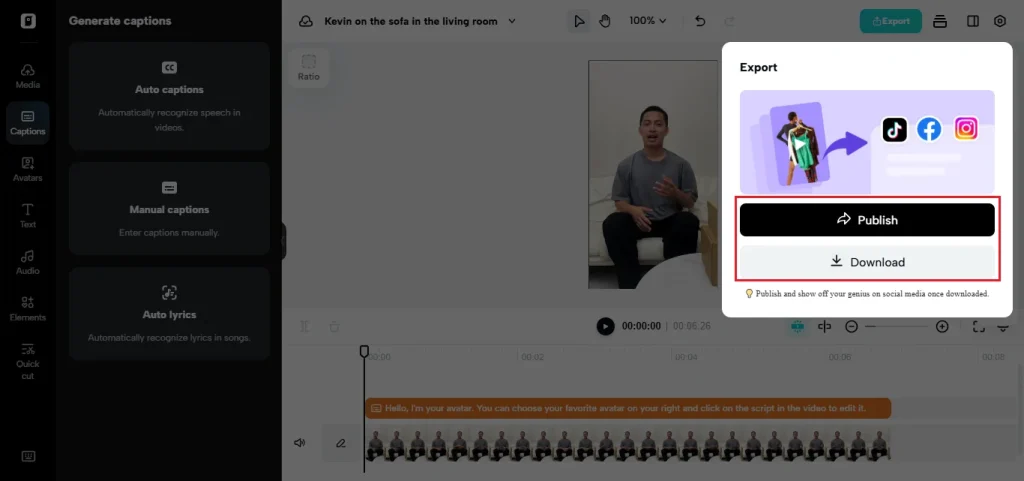

Step 3: export & share your video

Once the lip sync is generated, tap Edit More to refine your video further. In the video editor, you can adjust the script, voice timing, and facial expressions for the most natural sync. You can also add text overlays and background sounds to polish the edit. Once you’re happy with your video, tap Export to produce it in the format of your choice.

The rise of global digital storytellers

The future belongs to those who can speak to everyone, and AI makes that possible. Lip sync automation breaks down the language barrier for storytellers. A short Egyptian film could go viral in Brazil. An ad campaign shot in English could be translated into Mandarin and distributed to millions at the touch of a button.

Even a video agent, such as an animated character or digital spokesperson, can switch between languages while still conveying emotion, engaging audiences everywhere. This is how AI turns content into conversation.

One story, many voices

Every creator wants to share their story with the world, and now the world can listen. Lip sync helps creators not only say the words but also convey the emotion behind them.

From teachers to entertainers, this technology is transforming how humans communicate through digital media. This is storytelling that feels personal no matter the language, and authentic on every screen.

Your story deserves to be heard everywhere

With Pippit, your creativity speaks across cultures. Pippit’s new features are intuitive enough to not only simplify the lip sync process but also bring personality and artistic precision to it. Whether you are a filmmaker, an educator, or a content creator, you can carry your vision across miles with authentic emotion and natural sync.